0x00

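This article walks through the code behind the new hardening and mitigation mechanisms introduced in Android O, explaining how they work and what effect they have. Android's official introduction can be found here.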

The kernel security mechanisms introduced in this release are:

- PAN

- Hardened usercopy

- KASLR

- Post-init read-only memory

0x01

1.PAN

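PAN (Privileged Access Never) prevents the kernel from directly accessing, and in particular reading, user-space data. Since ARM introduced PXN, kernel mode can no longer execute user-space code directly, which made exploitation harder. Several PXN bypass techniques have appeared since then. One of them forges kernel structures in user space; the CVE-2016-2434 PoC I analyzed earlier uses this approach. For background, see the MOSEC talk by 360's spinlock2014, pjf and others, "Android Root利用技术漫谈:绕过PXN" ("A discussion of Android root exploitation techniques: bypassing PXN").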

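The key to this technique is to build a fake kernel structure in user space and point a kernel structure pointer at it. The kernel never executes user-space code, but it does read user-space data, and PAN blocks exactly this kind of access.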

Because PAN requires the ARMv8.1 instruction set, Android's kernel code adds PAN emulation for CPUs older than ARMv8.1.

1.1 PAN (armv8.1)

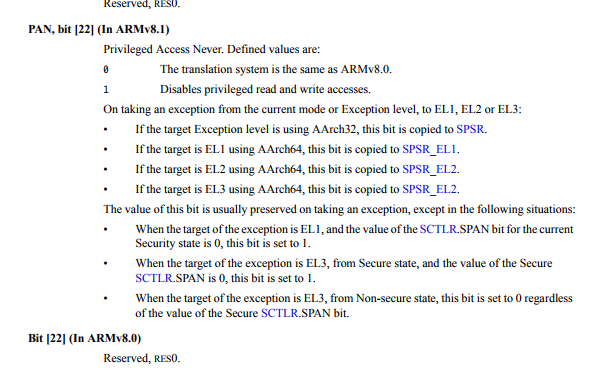

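With ARMv8.1 hardware support, the system needs no major changes: PAN is implemented by a PAN bit in the processor state (PSTATE; the corresponding bit in CPSR on AArch32). While the bit is set, privileged accesses to user-accessible memory fault. Search the ARMv8.1 documentation for PAN to see the details.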
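To make the effect of the PAN bit concrete, here is a small C model. It is only a sketch under stated assumptions: struct model_cpu, el1_access_allowed and model_copy_from_user are hypothetical names, and a 48-bit split is assumed in which addresses up to 0x0000ffffffffffff belong to user space. With PAN set, a privileged access to a user address faults, so the kernel's user-access routines clear PAN only for the duration of the copy (arm64 kernels of this era do this with SET_PSTATE_PAN(0) and SET_PSTATE_PAN(1) in their copy routines).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model, not kernel code. Assumes 48-bit VAs: addresses up to
 * USER_VA_TOP are translated through TTBR0, i.e. they are user space. */
#define USER_VA_TOP 0x0000ffffffffffffULL

struct model_cpu {
    bool pan; /* models PSTATE.PAN */
};

static bool is_user_va(uint64_t va)
{
    return va <= USER_VA_TOP;
}

/* A privileged (EL1) load or store: with PAN set, touching a user address faults. */
static bool el1_access_allowed(const struct model_cpu *cpu, uint64_t va)
{
    return !(cpu->pan && is_user_va(va));
}

/* Mimics the shape of a uaccess routine such as copy_from_user():
 * clear PAN, perform the access, set PAN again. */
static bool model_copy_from_user(struct model_cpu *cpu, uint64_t user_va)
{
    assert(is_user_va(user_va));
    cpu->pan = false;                 /* cf. SET_PSTATE_PAN(0) */
    bool ok = el1_access_allowed(cpu, user_va);
    cpu->pan = true;                  /* cf. SET_PSTATE_PAN(1) */
    return ok;
}
```

A kernel pointer redirected to a fake structure in user memory corresponds to el1_access_allowed(&cpu, user_va) returning false: the kernel takes a fault instead of reading the forged data.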

1.2 PAN Emulation

ARM64:

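A new option, CONFIG_ARM64_SW_TTBR0_PAN, controls whether PAN emulation is enabled. uaccess_ttbr0_disable blocks kernel access to user space by taking TTBR0 out of service. TTBR0 holds the base address of the first-level page table used for user addresses, so once it is effectively zeroed (in practice, pointed at a reserved all-zero table), every user-address translation fails and PAN is in effect.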
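The TTBR0 trick can be modeled in a few lines of C. This is a hypothetical simulation, not kernel code: the one-level table of 1 GiB blocks, the descriptor layout and the model_* names are assumptions chosen to keep the example small. What it shows is the mechanism: while TTBR0 points at an empty table every user-space translation faults, and a user-access routine temporarily restores the saved table (in the real kernel the saved value is kept in thread_info).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical one-level table: 512 entries, each mapping a 1 GiB block.
 * Bit 0 of an entry means "valid"; bits 30 and up hold the output address. */
#define ENTRIES     512
#define DESC_VALID  1ULL
#define BLOCK_MASK  ((1ULL << 30) - 1)

static const uint64_t reserved_zero_table[ENTRIES]; /* all zero: nothing valid */

struct model_mmu {
    const uint64_t *ttbr0;       /* table currently used for user addresses */
    const uint64_t *saved_ttbr0; /* the real user table while TTBR0 is parked */
};

/* Walk the table; returns false on a translation fault. */
static bool model_translate(const struct model_mmu *m, uint64_t va, uint64_t *pa)
{
    uint64_t desc = m->ttbr0[(va >> 30) & (ENTRIES - 1)];
    if (!(desc & DESC_VALID))
        return false;
    *pa = (desc & ~BLOCK_MASK) | (va & BLOCK_MASK);
    return true;
}

/* cf. uaccess_ttbr0_disable: park TTBR0 on the empty table. */
static void model_uaccess_ttbr0_disable(struct model_mmu *m)
{
    m->saved_ttbr0 = m->ttbr0;
    m->ttbr0 = reserved_zero_table;
}

/* cf. uaccess_ttbr0_enable: restore the user table for a uaccess routine. */
static void model_uaccess_ttbr0_enable(struct model_mmu *m)
{
    assert(m->saved_ttbr0 != 0);
    m->ttbr0 = m->saved_ttbr0;
}
```

Parking TTBR0 costs one register write on each kernel entry and uaccess window, which is what makes the emulation practical without hardware PAN.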

ARM32:

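ARM32 emulates PAN differently, by changing memory access permissions through the CPU's memory domains. This approach is simpler, but arm64 did not adopt it: AArch64 has no ARM32-style memory domains, so arm64 switches TTBR0 instead.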
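A sketch of the ARM32 approach, assuming the domain layout used by arch/arm/include/asm/domain.h (kernel in domain 0, user space in domain 1, two bits per domain in the Domain Access Control Register); the helper functions are hypothetical. In the kernel this is CONFIG_CPU_SW_DOMAIN_PAN: the user domain is set to "no access" while kernel code runs and switched to "client" (normal permission checks) only inside user-access routines.

```c
#include <assert.h>
#include <stdint.h>

/* Domain numbers and access types, modeled on the ARM32 kernel (assumption). */
#define DOMAIN_KERNEL    0
#define DOMAIN_USER      1
#define DOMAIN_NOACCESS  0u  /* any access to the domain faults */
#define DOMAIN_CLIENT    1u  /* accesses are checked against page permissions */

/* Each domain occupies two bits of the DACR. */
#define domain_val(dom, type) ((uint32_t)(type) << (2 * (dom)))

static unsigned domain_type(uint32_t dacr, unsigned dom)
{
    return (dacr >> (2 * dom)) & 3u;
}

/* Normal kernel execution: the user domain is locked out. */
static uint32_t dacr_kernel_default(void)
{
    return domain_val(DOMAIN_KERNEL, DOMAIN_CLIENT) |
           domain_val(DOMAIN_USER, DOMAIN_NOACCESS);
}

/* Inside a uaccess routine: reopen only the user domain. */
static uint32_t dacr_uaccess_enabled(uint32_t dacr)
{
    dacr &= ~domain_val(DOMAIN_USER, 3u);
    assert(domain_type(dacr, DOMAIN_USER) == DOMAIN_NOACCESS);
    return dacr | domain_val(DOMAIN_USER, DOMAIN_CLIENT);
}
```

Only the two bits of the user domain change, so kernel mappings keep their permissions throughout.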

2.Hardened usercopy

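Many vulnerabilities come from sloppy bounds checking when programmers call copy_*_user, for example the CVE-2016-5342 PoC analyzed earlier. Hardened usercopy checks the address and length of each copy inside the copy_*_user functions themselves; the check is done by check_object_size.

check_object_size ultimately calls __check_object_size.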
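The kind of check performed can be sketched as follows. In mainline's mm/usercopy.c, __check_object_size roughly rejects address ranges that wrap around, then checks the range against the object it lies in (a stack frame, a slab object, or kernel text). The sketch covers only the core bounds test for a single object; struct object_bounds and usercopy_within_object are hypothetical names, and the real code derives the object bounds from the slab cache or stack layout instead of taking them as a parameter.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical description of the kernel object a copy touches. */
struct object_bounds {
    uintptr_t start; /* first byte of the object */
    size_t size;     /* object size in bytes */
};

/* Simplified bounds test: the copy [ptr, ptr + n) must not wrap and
 * must lie entirely inside the object. */
static bool usercopy_within_object(const struct object_bounds *obj,
                                   uintptr_t ptr, size_t n)
{
    assert(obj != NULL);
    if (n == 0)
        return true;                 /* nothing is copied */
    if (ptr + n < ptr)
        return false;                /* address wrap-around */
    if (ptr < obj->start)
        return false;                /* starts before the object */
    size_t offset = ptr - obj->start;
    if (offset > obj->size || n > obj->size - offset)
        return false;                /* runs past the end of the object */
    return true;
}
```

For a 64-byte object, a copy of 49 bytes starting 16 bytes in is rejected while 48 bytes is accepted, which is the out-of-bounds copy_*_user pattern described above.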

3.KASLR

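Because the kernel was not randomized, every kernel symbol address is fixed once the kernel is built; I covered earlier how to extract symbol addresses from a kernel image. Android kernel 4.4 adds KASLR, which randomizes kernel addresses.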

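When the bootloader starts the kernel, it passes a seed to the kernel through the FDT. During boot, kaslr_early_init uses this seed to compute a random offset ("random size").

The kernel is then randomized with this value: on every boot, its load base is the default base shifted by the random offset.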

kaslr_early_init reads the seed from the FDT and uses it to compute the randomization offset.
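The offset computation can be sketched in C. It follows the shape of the early arm64 kaslr_early_init in mainline, where the seed comes from the kaslr-seed property of the device tree's /chosen node. Treat the constants (VA_BITS = 48, a 2^(VA_BITS-2) window, 2 MiB alignment) as assumptions, since the exact formula changed between kernel versions; model_kaslr_offset is a hypothetical name. Every kernel symbol is then shifted by the same amount: runtime address = link-time address + offset.

```c
#include <assert.h>
#include <stdint.h>

#define VA_BITS 48                 /* assumption: 48-bit kernel VA space */
#define SZ_2M   (2ULL << 20)

static_assert(VA_BITS - 2 > 21, "window must be larger than the alignment");

/* Sketch only. A zero seed means the bootloader provided no entropy,
 * in which case the kernel stays at its default address. */
static uint64_t model_kaslr_offset(uint64_t seed)
{
    if (seed == 0)
        return 0;
    /* Keep the seed bits inside a 2^(VA_BITS-2) window and clear the low
     * 21 bits so the image stays 2 MiB aligned. */
    uint64_t mask = ((1ULL << (VA_BITS - 2)) - 1) & ~(SZ_2M - 1);
    return seed & mask;
}
```

The 2 MiB alignment keeps the image mappable with large blocks, so randomization does not cost TLB efficiency.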

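P.S.: kaslr_early_init also randomizes the base address at which kernel modules are loaded, so module addresses are not fixed either. The CONFIG_RANDOMIZE_MODULE_REGION_FULL option goes further and makes the module load address completely independent of the kernel's load address, so that a leaked module address reveals nothing about where the kernel is.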
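The two module-region modes can be sketched like this, modeled on the same early arm64 kaslr_early_init. Assumptions: a 128 MiB module region, 4 KiB pages and, for the FULL mode, a caller-supplied window of at most 4 GiB; model_module_base is a hypothetical name. In the default mode the region must still cover the kernel's text so that relative branches between modules and the kernel can reach; in the FULL mode it is placed independently of the kernel, and calls into the kernel then go through PLT entries.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define MODULES_VSIZE (128ULL << 20)      /* assumption: 128 MiB module region */
#define PAGE_MASK_4K  (~(4096ULL - 1))    /* assumption: 4 KiB pages */

/* stext/etext: randomized kernel text range. window_*: only used in FULL mode. */
static uint64_t model_module_base(uint64_t stext, uint64_t etext,
                                  uint64_t window_start, uint64_t window_size,
                                  uint64_t seed, bool region_full)
{
    uint64_t base, range;

    if (region_full) {
        /* Independent of the kernel: anywhere inside the given window.
         * The 4 GiB cap keeps range * 2^21 from overflowing 64 bits. */
        assert(window_size >= MODULES_VSIZE && window_size <= (4ULL << 30));
        range = window_size - MODULES_VSIZE;
        base = window_start;
    } else {
        /* The region must still cover [stext, etext). */
        assert(etext - stext <= MODULES_VSIZE);
        range = MODULES_VSIZE - (etext - stext);
        base = etext - MODULES_VSIZE;
    }
    /* Use the low 21 seed bits as a fraction in [0, 1) of the range. */
    base += (range * (seed & ((1ULL << 21) - 1))) >> 21;
    return base & PAGE_MASK_4K;
}
```

The default mode trades some independence for direct branches; the FULL mode pays for full independence with PLT indirection on calls into the kernel.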

4.Post-init read-only

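Post-init read-only is a countermeasure against planting shellcode in the VDSO region. The VDSO (virtual dynamic shared object) is a virtual shared library provided by the kernel and shared by all processes; it speeds up time-sensitive system calls such as getcpu and time. Because this region was writable, some exploits patched its code during exploitation to plant and run their own shellcode there. One Dirty COW PoC for Android uses this method to gain root. For more details on exploiting the VDSO, see "给shellcode找块福地- 通过VDSO绕过PXN" ("Finding a safe haven for shellcode: bypassing PXN via the VDSO").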

The post-init read-only mechanism makes this region read-only once it has been initialized.
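In the kernel, data that is written only during boot is marked __ro_after_init (for example `static int limit __ro_after_init;`, where `limit` is a hypothetical variable), which places it in a section that is write-protected once initialization finishes. The snippet below is only a user-space analogy of that idea using POSIX mmap and mprotect, not the kernel mechanism itself: a page is filled during an "init" phase, sealed read-only, and a later patch attempt, made in a forked child so the caller survives, faults. make_ro_after_init and write_faults are hypothetical helper names.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <signal.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Map one page, fill it with init data, then seal it read-only. */
static unsigned char *make_ro_after_init(const void *init, size_t len)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0 && len <= (size_t)page);
    unsigned char *p = mmap(NULL, (size_t)page, PROT_READ | PROT_WRITE,
                            MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return NULL;
    memcpy(p, init, len);                      /* init phase: still writable */
    if (mprotect(p, (size_t)page, PROT_READ) != 0) {
        munmap(p, (size_t)page);
        return NULL;
    }
    return p;                                  /* sealed: read-only from now on */
}

/* Returns true if writing to p kills a child process with a memory fault. */
static bool write_faults(unsigned char *p)
{
    pid_t pid = fork();
    if (pid < 0)
        return false;
    if (pid == 0) {
        ((volatile unsigned char *)p)[0] = 0x90; /* attempted "patch" */
        _exit(0);                                /* reached only if the write succeeded */
    }
    int status = 0;
    if (waitpid(pid, &status, 0) < 0)
        return false;
    return WIFSIGNALED(status) &&
           (WTERMSIG(status) == SIGSEGV || WTERMSIG(status) == SIGBUS);
}
```

Both SIGSEGV and SIGBUS are accepted because platforms differ in which signal they raise for a write to a read-only mapping.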
